The move comes as global tensions rise, partly driven by past trade conflicts and ongoing economic uncertainty.

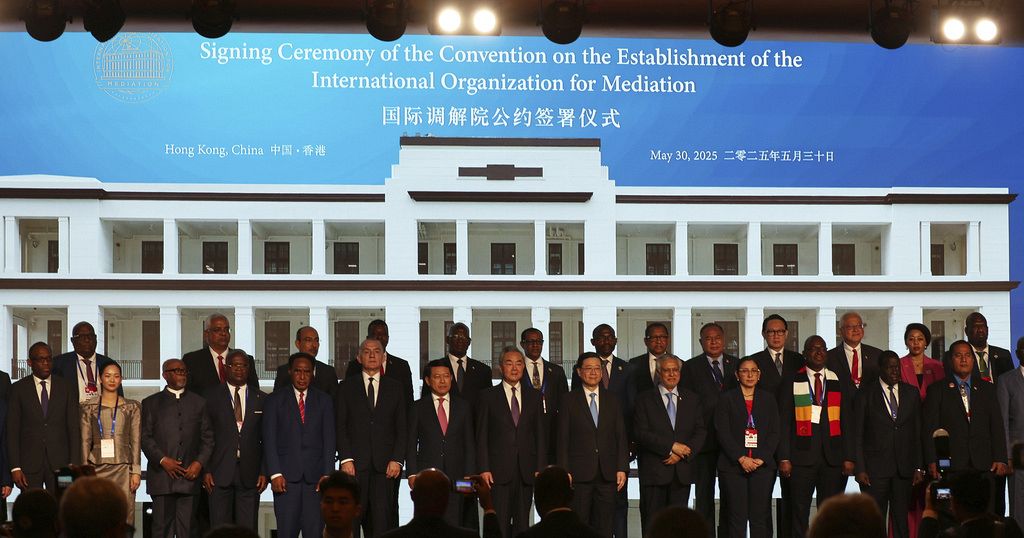

“Just now, representatives of 32 countries signed the Convention, making these countries the founding members of the IOMed. High-level representatives of more than 50 other countries and nearly 20 international organizations are also present at the ceremony. You have come here from across the world for a common goal: promoting the peaceful resolution of disputes and advancing friendship and cooperation between countries,” China’s Foreign Minister Wang Yi said at the signing ceremony.

The US is being left behind.